Feel & Drive

let your feelings drive you

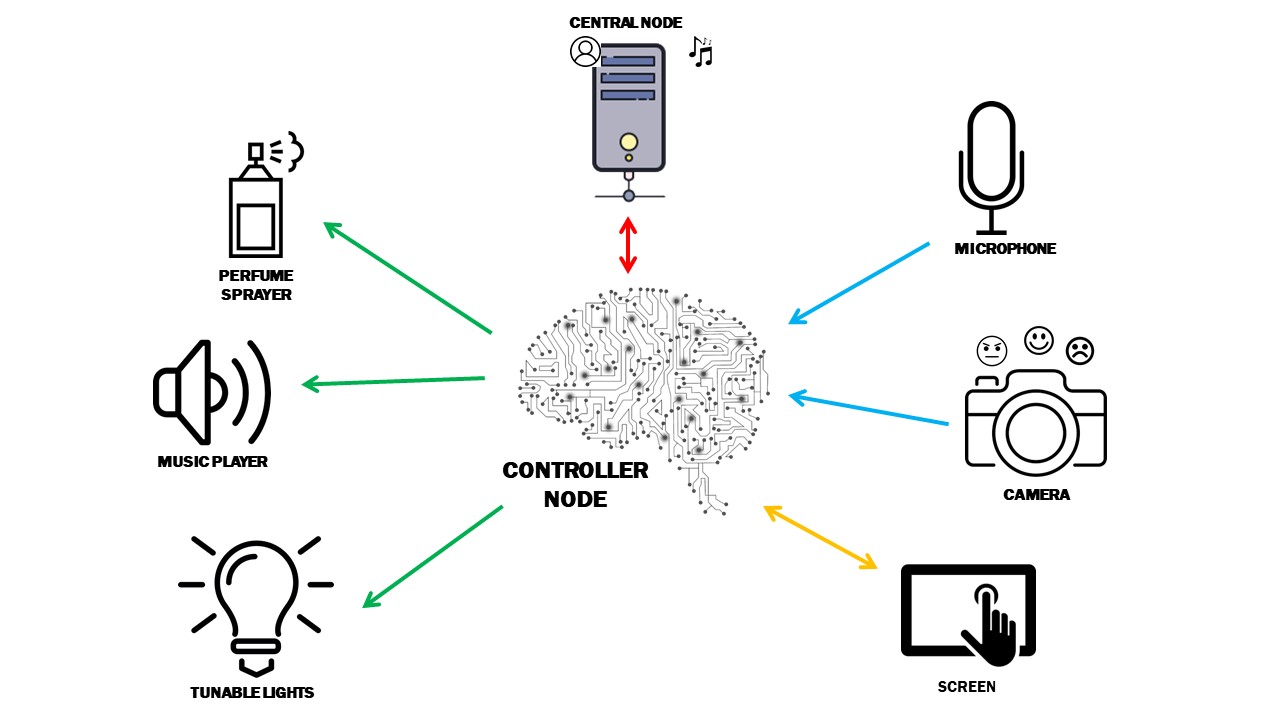

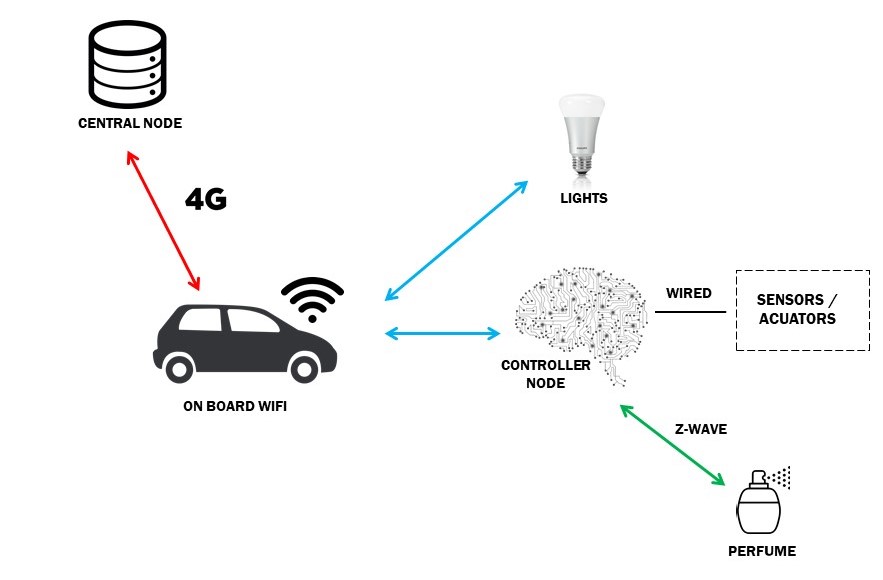

Feel & Drive aims to make driving as safe and comfortable as possible by counteracting the negative effects that certain emotions can have on the driver. While the car is being driven, the system continuously analyses the driver's emotions through a camera. If the driver appears upset or angry, the system becomes proactive, adjusting the colour of the cockpit lights, the music and the ambient scent to gently guide the driver toward a happier and healthier mood.
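The reaction described above can be pictured as a small decision function. This is only an illustrative sketch: the mood labels, the `AmbientSettings` fields and the concrete colour, playlist and scent values are hypothetical placeholders, not values taken from the project.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mood labels; the real recogniser may use a different set.
NEGATIVE_MOODS = {"angry", "upset"}


@dataclass(frozen=True)
class AmbientSettings:
    light_colour: str      # colour of the cockpit lights
    playlist: str          # playlist currently selected
    scent: Optional[str]   # scent to diffuse, or None to leave the air unchanged


# Placeholder calming preset, chosen for illustration only.
CALMING = AmbientSettings(light_colour="soft blue", playlist="calm", scent="lavender")


def react_to_mood(mood: str, current: AmbientSettings) -> AmbientSettings:
    """Switch to the calming preset when the driver looks upset or angry;
    otherwise leave the cockpit as it is."""
    if mood.lower() in NEGATIVE_MOODS:
        return CALMING
    return current
```

In a running system, a function like this would be called each time the camera pipeline produces a new mood estimate.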

In particular, the following known effects on mood are exploited:

| # | AREA | FEATURE | DESCRIPTION |
|---|---|---|---|
| #1 | Ambient Tuning | Light tuning | Lights will change colour depending on the mood |
| #2 | Ambient Tuning | Adaptive Music Player | Music will change depending on the mood, to make the user feel more comfortable |
| #3 | Emotion Prediction | Facial Emotion Recognition | The system will infer the user's mood through facial expression recognition and adapt to it |
| #4 | User Interaction | Vocal Requests | The user can interact with the system by voice, asking it to change the music, lights or perfumes |
| #5 | User Info | User Music Database | A personalized music database will be created for each recognised mood |
| #6 | User Info | User Login | Using a screen, the user can log in to the system |
| #7 | System | Remote Database | The database of user profiles can be hosted remotely, making the system shareable |

| # | AREA | FEATURE | DESCRIPTION |
|---|---|---|---|
| #8 | Ambient Tuning | Perfume tuning | Depending on the mood, perfume will be sprayed to comfort the user |
| #9 | User Interaction | Vocal Interaction | The user can tag/untag favourite songs through vocal commands |
| #10 | User Info | Favourite Colour | During registration, the user can choose a favourite colour to be used when the user is happy |
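One possible shape for the per-mood music database and the tag/untag commands is sketched below. The class name `MoodMusicLibrary`, its methods, and the idea that favourites are stored per mood are assumptions made for illustration; the feature list does not specify a data model.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set


class MoodMusicLibrary:
    """Hypothetical in-memory store mapping each mood to the user's tagged songs."""

    def __init__(self) -> None:
        self._favourites: DefaultDict[str, Set[str]] = defaultdict(set)

    def tag(self, mood: str, song: str) -> None:
        """Mark a song as a favourite for the given mood."""
        self._favourites[mood].add(song)

    def untag(self, mood: str, song: str) -> None:
        """Remove a song from the given mood; does nothing if it was not tagged."""
        self._favourites[mood].discard(song)

    def songs_for(self, mood: str) -> List[str]:
        """Return the tagged songs for a mood in alphabetical order."""
        return sorted(self._favourites.get(mood, ()))
```

A voice command handler could call `tag` or `untag` with the currently detected mood and the song being played.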

| # | AREA | FEATURE | DESCRIPTION |
|---|---|---|---|
| #11 | Ambient Tuning | Focus Assistant | Two opposite colours will alternate to keep the driver focused |
| #12 | Emotion Prediction | Improved Emotion Recognition | Galvanic skin resistance will be measured to better assess the driver's level of stress and emotional arousal |
| #13 | User Interaction | Hot-Word Trigger | The system will continuously listen in the background and will be triggered by hot-words |
| #14 | System | User Learning | The system is able to learn from user choices and feedback in order to improve |
| #15 | System | Music client | The system will rely on an official music server to retrieve a wider music catalogue |
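As a rough illustration of the Focus Assistant (#11), the sketch below alternates a base colour with its opposite. It assumes that "opposite" means the colour half a turn away on the hue wheel, which is an interpretation and not something the feature list states; the function names are hypothetical.

```python
import colorsys
from itertools import cycle, islice
from typing import List, Tuple

RGB = Tuple[int, int, int]


def opposite_colour(rgb: RGB) -> RGB:
    """Rotate the hue by 180 degrees, keeping saturation and brightness."""
    r, g, b = (channel / 255 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    r2, g2, b2 = colorsys.hsv_to_rgb((h + 0.5) % 1.0, s, v)
    return (round(r2 * 255), round(g2 * 255), round(b2 * 255))


def focus_sequence(base: RGB, steps: int) -> List[RGB]:
    """Return `steps` colours alternating between `base` and its opposite."""
    return list(islice(cycle((base, opposite_colour(base))), steps))
```

For a pure red base colour, `focus_sequence((255, 0, 0), 3)` alternates red, cyan, red. How fast the lights should switch is left open here, since the source does not give a timing.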